Results: Can an algorithm stop suicides by spotting the signs of despair?

Published on 11/30/2018

QUESTIONS

1.

A few days after the death of Anthony Bourdain last summer, a suicide researcher at the Royal Mental Health Centre in Ottawa downloaded four years of public tweets by @Bourdain onto the computer in his office. Zachary Kaminsky was certainly not the only one studying Bourdain's personal tweets for clues to why the famed chef, author and television travel personality had taken his own life. But he had a unique tool – a computer algorithm designed to identify and sort tweets by characteristics such as hopelessness or loneliness to reveal a pattern only a machine could find. In simple terms, the algorithm, still in the early trial stage, charts the mood of the person tweeting in real time, with the goal of using that information to peer into the future. The idea being tested by Dr. Kaminsky, and by researchers around the world, is whether artificial intelligence can predict suicide risk in time to intervene. Do you think this may be a useful tool for early intervention and suicide prevention?

Yes, it sounds promising

36%

880 votes

Not sure

50%

1203 votes

No

14%

338 votes

2.

Everybody thinking about suicide is in distress and needs help. The key is to identify and decrease the risk factors for each individual patient. It is important for people to understand that "suicide is preventable and there is hope." A tool is only helpful if clinicians are still able to question its results against the actual person sitting before them. And even then, a tool is only useful if the health-care system has the resources to provide good care for the patients in question – not only when they arrive in crisis, but, ideally, early enough to prevent them from reaching that crisis in the first place. Possibly, if an algorithm could help flag and identify these risk factors more efficiently, the person in distress could have a better chance of a positive outcome. Do you think that the medical community, the education community and technology should all work together to identify people at risk?

Yes

41%

999 votes

Maybe

46%

1111 votes

No

13%

311 votes

3.

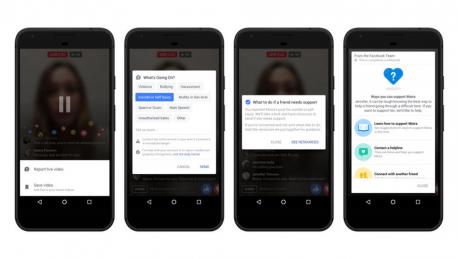

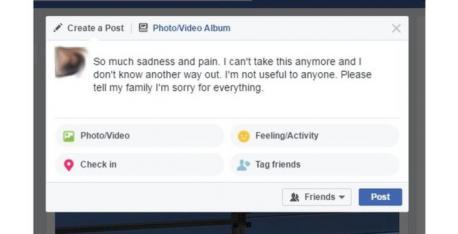

Facebook has been using artificial intelligence since November, 2017, to locate phrases that may be signs of distress – for instance, one user asking another, "Are you okay?" When it finds them, it sends pop-up ads about suicide hotlines or highlights ways people can respond when they are worried about someone, prompting them to ask certain questions, report the incident to Facebook or call for help themselves. The approach is meant to provide support, not predict individual behaviour. But in extreme circumstances where harm appears imminent, Facebook moderators have contacted emergency services. Do you see this as a helpful tool, or an infringement on your privacy?

A helpful tool

20%

489 votes

Infringement of privacy

15%

352 votes

Bit of both, but if it can prevent a suicide, it's needed

40%

965 votes

Not sure

25%

615 votes

COMMENTS